Sol is Lehigh's newest Linux cluster, replacing Corona and other ancillary Level 2 resources. Following our tradition of naming high performance computing clusters after stars or celestial phenomena, Sol is named after the nearest star, the Sun.

Acknowledgement

In publications, reports, and presentations that use Sol, Hawk, or Ceph, please acknowledge Lehigh University with the following statement:

"Portions of this research were conducted on Lehigh University's Research Computing infrastructure partially supported by NSF Award 2019035"

Sol is a heterogeneous cluster launched on Oct 1, 2016, with a total of 34 nodes, 26 of which are Condo investments by two CAS faculty members. All nodes provide 500 GB of scratch storage for running jobs and are interconnected with a 2:1 oversubscribed EDR (100 Gbps) InfiniBand fabric. In Fall 2018, a new Ceph storage cluster was installed, providing an 11 TB CephFS global scratch space where temporary data is kept for 7 days after a job completes.

Upgrades by Condo Investments

- In Jan. 2017, 25 of the Condo nodes were each upgraded with two GTX 1080 GPU cards.

- In 2017, Condo Investments from RCEAS and CBE faculty added 22 nodes and 16 NVIDIA GTX 1080 GPU cards.

- In 2018, Condo Investments from RCEAS and CAS faculty added 24 nodes and 48 NVIDIA RTX 2080 Ti GPU cards.

- In Mar. 2019, Condo Investments from RCEAS faculty added 1 node.

- Between May and September 2020, Condo Investments from CAS, RCEAS, and COH faculty added 8 nodes.

- In Spring 2022, Condo Investments from CAS and RCEAS faculty added 4 nodes.

As of Feb. 2022

| Processor Type | Nodes | CPU Cores | GPUs | CPU Memory (GB) | GPU Memory (GB) | CPU TFLOPS | GPU TFLOPS | Annual SUs |
|---|---|---|---|---|---|---|---|---|
| 2.3 GHz E5-2650v3 | 9 | 180 | 10 | 1152 | 80 | 5.76 | 2.57 | 1,576,800 |
| 2.3 GHz E5-2670v3 | 33 | 792 | 62 | 4224 | 496 | 25.344 | 15.934 | 6,937,920 |
| 2.2 GHz E5-2650v4 | 14 | 336 | 0 | 896 | | 9.6768 | | 2,943,360 |
| 2.6 GHz E5-2640v3 | 1 | 16 | 0 | 512 | | 0.5632 | | 140,160 |
| 2.3 GHz Gold 6140 | 24 | 864 | 48 | 4608 | 528 | 41.472 | 18.392 | 7,568,640 |
| 2.6 GHz Gold 6240 | 6 | 216 | 0 | 1152 | | 10.368 | | 1,892,160 |
| 2.1 GHz Gold 6230R | 2 | 104 | 0 | 768 | | 4.3264 | | 911,040 |
| 3.0 GHz Gold 6248R | 1 | 48 | 5 | 192 | 200 | 3.072 | 48.5 | 420,480 |
| 3.0 GHz EPYC 7302 (Coming Soon) | 3 | 96 | 24 | 768 | 1152 | 4.3008 | 37.44 | 840,960 |
| Total | 93 | 2652 | 149 | 14272 | 2456 | 104.8832 | 121.9964 | 23,231,520 |
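The Total row is the column-wise sum of the processor rows. As a quick consistency check, the shell sketch below re-adds the integer columns (nodes, cores, GPUs, CPU and GPU memory, annual SUs) using figures copied from the table; `ROWS` and `sum_columns` are hypothetical names chosen for illustration.

```shell
# Integer columns copied from the table above, one processor type per line:
#   nodes  cores  gpus  cpu_mem_gb  gpu_mem_gb  annual_sus
ROWS='9 180 10 1152 80 1576800
33 792 62 4224 496 6937920
14 336 0 896 0 2943360
1 16 0 512 0 140160
24 864 48 4608 528 7568640
6 216 0 1152 0 1892160
2 104 0 768 0 911040
1 48 5 192 200 420480
3 96 24 768 1152 840960'

# sum_columns: read whitespace-separated integers on stdin and print the
# total of each column on a single line.
sum_columns() {
  awk '{ n = NF; for (i = 1; i <= NF; i++) sum[i] += $i }
       END { for (i = 1; i <= n; i++) printf "%d%s", sum[i], (i < n ? " " : "\n") }'
}

printf '%s\n' "$ROWS" | sum_columns   # prints: 93 2652 149 14272 2456 23231520
```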

System Configuration

Intel Xeon processor frequencies for AVX2 and AVX-512 workloads:

- https://www.microway.com/knowledge-center-articles/detailed-specifications-intel-xeon-e5-2600v3-haswell-ep-processors/

- https://www.intel.com/content/dam/www/public/us/en/documents/specification-updates/xeon-e5-v3-spec-update.pdf

- https://www.microway.com/knowledge-center-articles/detailed-specifications-of-the-intel-xeon-e5-2600v4-broadwell-ep-processors/

- https://www.intel.com/content/dam/www/public/us/en/documents/specification-updates/xeon-e5-v4-spec-update.pdf

- https://www.microway.com/knowledge-center-articles/detailed-specifications-of-the-skylake-sp-intel-xeon-processor-scalable-family-cpus/

- https://www.intel.com/content/dam/www/public/us/en/documents/specification-updates/xeon-scalable-spec-update.pdf

- https://www.intel.com/content/dam/www/public/us/en/documents/specification-updates/2nd-gen-xeon-scalable-spec-update.pdf

Condo Investments

Sol is designed to be expanded through the Condo Investment program. Faculty, departments, or colleges can invest in Sol by purchasing nodes that increase its overall capacity. In return, investors receive an annual allocation of computing core-hours, or service units (SUs), equivalent to their investment, which they can share with collaborators (students, postdocs, and other faculty) for a five-year period (or the length of the hardware warranty). All Sol users, both Condo and Hotel Investors, can use any available node as long as their allocations have not been consumed.
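The allocations listed under Current Investors are consistent with one SU being one core-hour and each purchased core being credited for every hour of the year (24 × 365 = 8760 hours); for example, one 20-core node gives 20 × 8760 = 175,200 SUs. The sketch below encodes that arithmetic under this assumption; `annual_sus` and `term_sus` are hypothetical helper names, and the term total is simply the annual figure multiplied by the number of years.

```shell
# Assumption (consistent with the allocations listed below): 1 SU = 1 core-hour,
# and each purchased core is credited for all 24 * 365 = 8760 hours of a year.
HOURS_PER_YEAR=8760

# annual_sus NODES CORES_PER_NODE -> SUs credited per year for that purchase
annual_sus() {
  echo $(( $1 * $2 * HOURS_PER_YEAR ))
}

# term_sus NODES CORES_PER_NODE [YEARS] -> SUs over the whole term (default 5 years)
term_sus() {
  echo $(( $(annual_sus "$1" "$2") * ${3:-5} ))
}

annual_sus 1 20    # one 20-core node: 175200
annual_sus 12 36   # twelve 36-core nodes: 3784320
term_sus 1 24      # one 24-core node over 5 years: 1051200
```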

Current Investors

Dimitrios Vavylonis, Department of Physics: 1 20-core compute node

Annual allocation: 175,200 SUs

Wonpil Im, Department of Biological Sciences:

- 25 24-core compute nodes with 2 GTX 1080 cards per node (5,256,000 SUs)

- 12 36-core compute nodes with 4 RTX 2080 Ti cards per node (3,784,320 SUs)

- Expected April 2022: 3 32-core compute nodes with 8 A40 GPUs per node (840,960 SUs)

Total Annual allocation: 9,881,280 SUs

Anand Jagota, Department of Chemical Engineering: 1 24-core compute node

Annual allocation: 210,240 SUs

Brian Chen, Department of Computer Science and Engineering:

- 1 24-core compute node (210,240 SUs)

- 2 52-core compute nodes (911,040 SUs)

Annual allocation: 1,121,280 SUs

Edmund Webb III & Alparslan Oztekin, Department of Mechanical Engineering and Mechanics: 6 24-core compute nodes

Annual allocation: 1,261,440 SUs

Jeetain Mittal & Srinivas Rangarajan, Department of Chemical Engineering: 13 24-core Broadwell-based compute nodes and 16 GTX 1080 cards

Annual allocation: 2,733,120 SUs

Seth Richards-Shubik, Department of Economics

Annual allocation: 140,160 SUs

Ganesh Balasubramanian, Department of Mechanical Engineering and Mechanics: 7 36-core Skylake-based compute nodes

Annual allocation: 2,207,520 SUs

Department of Industrial and Systems Engineering: 2 36-core Skylake-based compute nodes

Annual allocation: 630,720 SUs

Lisa Fredin, Department of Chemistry:

- 2 36-core Skylake-based compute nodes

- 4 36-core Cascade Lake-based compute nodes

Annual allocation: 1,892,160 SUs

Paolo Bocchini, Department of Civil and Environmental Engineering: 1 24-core Broadwell-based compute node

Annual allocation: 210,240 SUs

Hannah Dailey, Department of Mechanical Engineering and Mechanics: 1 36-core Skylake-based compute node

Annual allocation: 315,360 SUs

College of Health: 2 36-core Cascade Lake-based compute nodes

Annual allocation: 630,720 SUs

Keith Moored, Department of Mechanical Engineering and Mechanics: 1 48-core Cascade Lake Refresh compute node with 5 A100 GPUs

Annual allocation: 420,480 SUs

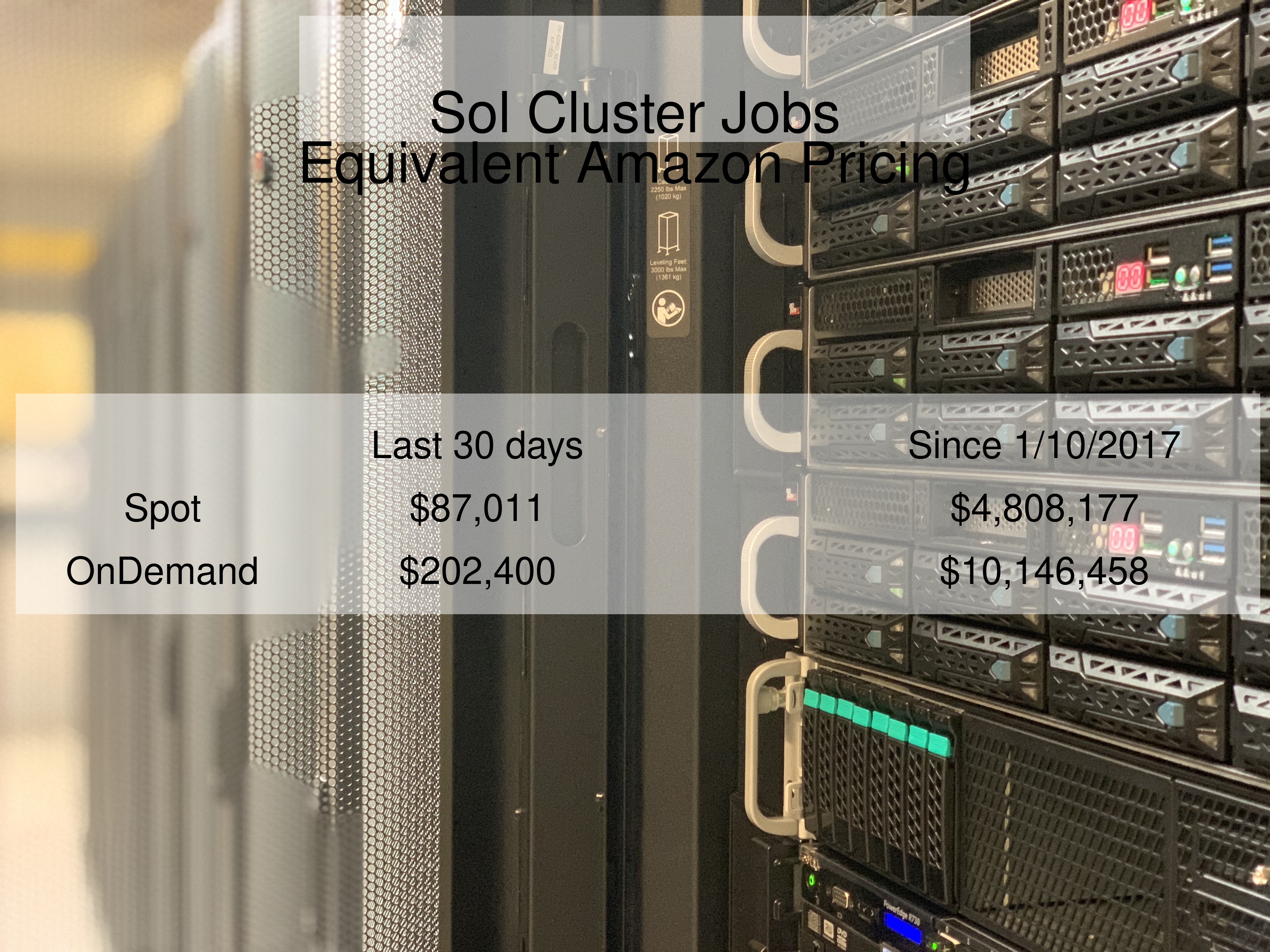

Comparison with AWS

Methods/Notes:

Adapted from the code at https://gitlab.beocat.ksu.edu/Admin-Public/amazon-cost-comparison

Spot and On-Demand prices were last updated on August 6, 2020, from https://aws.amazon.com/ec2/spot/pricing. These prices fluctuate, and Spot pricing requires that users accept job preemption; no jobs on Sol are currently preemptable.

The Amazon instance types selected were those that match Sol job sizes in memory, core count, and GPUs: g4dn.xlarge through g4dn.12xlarge, p3.8xlarge, c5n.large through c5n.18xlarge, and r5.8xlarge through r5.16xlarge. The size and run time of actual Sol jobs, taken from Slurm's internal database, were used to match each job to an instance type and generate a cost for every job run on Sol.
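One way to picture the matching step: for each job, keep the instance types whose vCPU count, memory, and GPU count cover the job's request, then take the cheapest. The sketch below assumes that rule (the original code may match differently), pairs one vCPU with each requested Sol core (the half-core slowdown belongs to the costing step), and uses an illustrative subset of instances. The hourly prices are placeholders, not current AWS rates, and `CATALOG` and `pick_instance` are hypothetical names.

```shell
# Illustrative subset of the instance families named above:
#   name  vCPUs  memory_GiB  GPUs  hourly_price_usd
# Verify specs against AWS documentation; prices are PLACEHOLDERS, not current rates.
CATALOG='c5n.large 2 5.25 0 0.108
c5n.2xlarge 8 21 0 0.432
c5n.9xlarge 36 96 0 1.944
c5n.18xlarge 72 192 0 3.888
r5.8xlarge 32 256 0 2.016
r5.16xlarge 64 512 0 4.032
g4dn.xlarge 4 16 1 0.526
g4dn.12xlarge 48 192 4 3.912
p3.8xlarge 32 244 4 12.24'

# pick_instance CORES MEM_GIB GPUS -> name of the cheapest instance that fits.
# Assumes one vCPU per requested Sol core; returns status 1 if nothing fits.
pick_instance() {
  printf '%s\n' "$CATALOG" | awk -v c="$1" -v m="$2" -v g="$3" '
    # CPU-only jobs stay on GPU-less instances; GPU jobs need enough GPUs.
    ((g == 0 && $4 == 0) || (g > 0 && $4 >= g)) && $2 >= c && $3 >= m {
      if (best == "" || $5 + 0 < price) { best = $1; price = $5 + 0 }
    }
    END { if (best == "") exit 1; print best }'
}

pick_instance 36 64 0   # c5n.9xlarge
pick_instance 16 64 4   # g4dn.12xlarge
```

Choosing the cheapest fitting type keeps the estimate favorable to AWS; a stricter comparison could also factor in network or storage needs, which the methods above exclude.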

AWS costs do not include data storage, networking, VPN/transit, data ingress/egress, or any charges other than the EC2 instance price. The code bills per second, even for jobs that ran for less than one hour, and assumes that, because an Amazon vCPU is half a physical core, a job takes twice as long on Amazon as on Sol.
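Taken together, these assumptions give the estimated EC2 cost of one job as its hourly instance price times twice its Sol run time in hours, i.e. cost = price × 2 × seconds / 3600. The function below is a hypothetical sketch of that formula only; the $1.944/h rate in the example is a placeholder, not a quoted AWS price.

```shell
# aws_job_cost HOURLY_PRICE_USD SOL_RUNTIME_SECONDS -> estimated EC2 cost in USD
# Follows the two assumptions stated above:
#   * billing is per second, with no rounding up to a full hour;
#   * a job runs twice as long on AWS, since a vCPU is half a Sol core.
# Storage, networking and data-transfer charges are deliberately ignored.
aws_job_cost() {
  awk -v price="$1" -v secs="$2" 'BEGIN { printf "%.2f\n", price * (2 * secs) / 3600 }'
}

aws_job_cost 1.944 5400   # 90-minute Sol job at a placeholder rate: 5.83
```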

Accounts & Allocations

For access to Sol, please see the revised Accounts & Allocations policy.

Logging into Sol

Sol is accessed via SSH using an SSH client. Linux and Mac users can log in to Sol by entering the following command in a terminal:

ssh username@sol.cc.lehigh.edu

If you are off campus, you have two options:

- Start a VPN session, then log in to Sol using the ssh command above.

- Log in to the SSH gateway first, then log in to Sol by running the ssh command above at the gateway prompt. If your local ssh client is OpenSSH 7.3 or later, you can instead use the gateway as a jump host in a single command:

ssh -J username@ssh.cc.lehigh.edu username@sol.cc.lehigh.edu

If you use the SSH gateway, you can simplify the ssh and scp (file transfer) commands by adding the following to the ${HOME}/.ssh/config file on your local system:

    Host *ssh
      HostName ssh.cc.lehigh.edu
      Port 22
      # This is an example; replace alp514 with your Lehigh ID
      User alp514

    Host *sol
      HostName sol.cc.lehigh.edu
      Port 22
      User <LehighID>
      # Use this if the next command doesn't work
      # ProxyCommand ssh ssh nc %h %p
      ProxyCommand ssh -W %h:%p ssh

With this configuration, you can log in to Sol and copy files from it as shown below. You will be prompted for your password twice, first for the SSH gateway and then for Sol.

ssh sol

scp sol:<path to source directory>/filename <path to destination directory>/filename

If you use public key authentication, please protect your private key with a passphrase; a key without one is a security risk. Use ssh-agent and ssh-add to manage your keys. See https://kb.iu.edu/d/aeww for details.
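You can confirm that an existing key actually has a passphrase. The sketch below relies on two real `ssh-keygen` options: `-y` prints the public key derived from a private key, and `-P ''` supplies an empty passphrase, so the command only succeeds for an unencrypted key. `has_passphrase` is a hypothetical helper name, and the `~/.ssh/id_*` glob assumes default key file names.

```shell
# has_passphrase KEYFILE -> status 1 if the key opens with an empty passphrase
# (unprotected), 2 if the file is missing, 0 otherwise (protected, or not
# readable as a key by ssh-keygen).
has_passphrase() {
  [ -f "$1" ] || return 2
  if ssh-keygen -y -P '' -f "$1" >/dev/null 2>&1 </dev/null; then
    return 1
  fi
  return 0
}

# Check every private key in ~/.ssh (public halves end in .pub and are skipped).
for key in "$HOME"/.ssh/id_*; do
  case "$key" in *.pub) continue ;; esac
  [ -f "$key" ] || continue
  if ! has_passphrase "$key"; then
    echo "WARNING: $key has no passphrase; add one with: ssh-keygen -p -f $key"
  fi
done
```

Once your key is protected, running `eval "$(ssh-agent -s)"` followed by `ssh-add` loads it into the agent, so the passphrase is typed once per session rather than at every connection.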

Windows users will need to install an SSH client to access Sol. Lehigh Research Computing recommends MobaXterm, since it can be configured to use the SSH gateway as a jump host. DUO authentication is enabled for faculty and staff on the SSH gateway. When a window pops up asking for a password, enter your Lehigh password. A second pop-up, labeled only "DUO Login", follows; enter 1 for a push to DUO or 2 for a call to your registered phone.